Dec 8, 2023

TabbyML Newsletter #4: 🏆Top in open source, Retrieval-augmented code generation, 🏢Enterprise preview, 💬User testimonials, and more...

Hello Tabby friends👋,

It's a spectacular and exciting time for the growing world of AI🌟! With so much happening, from big news📰 to major announcements📢, we're eager to share the top highlights and the latest from Tabby. Here we go 🚀🚀🚀

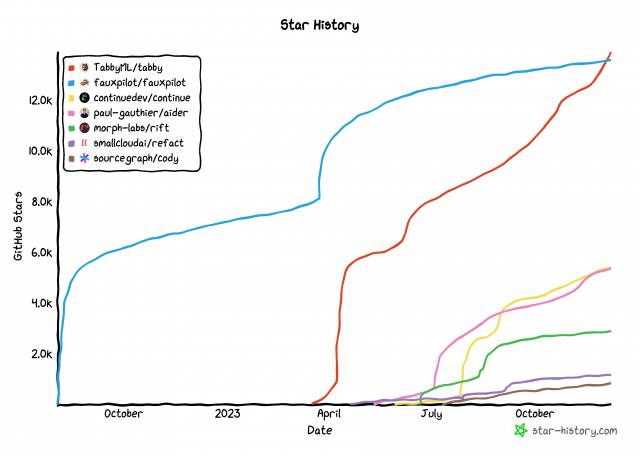

📈 Top in Open Source!

We started TabbyML with a vision to democratize AI-enhanced developer tools with an open ecosystem. Today, we're thrilled to celebrate Tabby as the most loved open source coding assistant product 🎉. Your support and enthusiasm have been vital to this journey❤️.

Looking ahead, we plan to go even further into the developer lifecycle and innovate across its full spectrum. At TabbyML, developers aren't just participants; they are at the heart of the LLM revolution.

Join our vibrant community where your ideas and feedback help shape the next-gen dev tools!

🔥 What's New?

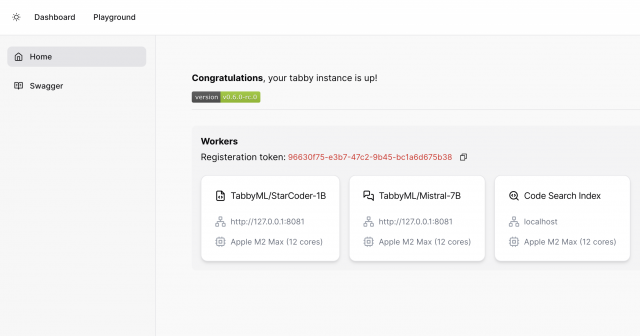

Tabby has come a long way over the past few months! The latest v0.6.0 release marks the debut of Tabby's Chat Playground and a suite of enterprise-friendly features. Here are the highlights:

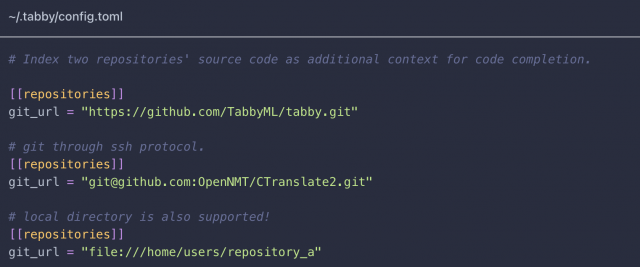

📁 Retrieval-Augmented Code Generation

Tabby now has a deeper understanding of your private repositories! With a simple config setup, Tabby analyzes the variables, classes, methods, and function signatures in your repo (supporting 8+ programming languages, with more on the way!) to offer project-native code suggestions. Discover more in our blog post!
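To make the idea concrete, here is a toy Python sketch of retrieval-augmented completion. It is not Tabby's implementation: it assumes a single Python file stands in for the repository, uses the standard `ast` module to collect top-level function and class signatures, and prepends any whose names appear in the code being typed to the completion prompt. The names `extract_signatures` and `build_prompt` are hypothetical.

```python
import ast
import re


def extract_signatures(source: str) -> dict[str, str]:
    """Map each top-level function/class name to a one-line signature snippet."""
    tree = ast.parse(source)
    snippets = {}
    for node in tree.body:
        if isinstance(node, ast.FunctionDef):
            args = ", ".join(a.arg for a in node.args.args)
            snippets[node.name] = f"def {node.name}({args}): ..."
        elif isinstance(node, ast.ClassDef):
            snippets[node.name] = f"class {node.name}: ..."
    return snippets


def build_prompt(prefix: str, index: dict[str, str], max_snippets: int = 3) -> str:
    """Prepend repo snippets whose names appear in the code being edited."""
    # Naive retrieval (an assumption for this sketch): exact identifier match.
    identifiers = set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*", prefix))
    hits = [snippet for name, snippet in index.items() if name in identifiers]
    # Retrieved snippets become comments, so the model sees them as context.
    context = "\n".join(f"# {s}" for s in hits[:max_snippets])
    return f"{context}\n{prefix}" if context else prefix


# Hypothetical "repository" contents used only for illustration.
repo_file = '''
class UserStore:
    pass

def load_user(store, user_id):
    return store.get(user_id)

def unrelated():
    pass
'''

index = extract_signatures(repo_file)
prompt = build_prompt("user = load_user(", index)
print(prompt)
```

Only `load_user` is referenced in the prefix, so only its signature is pulled into the prompt. A real system would index many files and languages and rank candidates more carefully; exact name matching just keeps the sketch short.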

☁️ Tabby on Modal GPU

No worries if you lack local GPUs for larger models. Tabby now integrates smoothly with Modal's serverless cloud GPUs, so you can enjoy a more cost-effective pay-as-you-go plan!

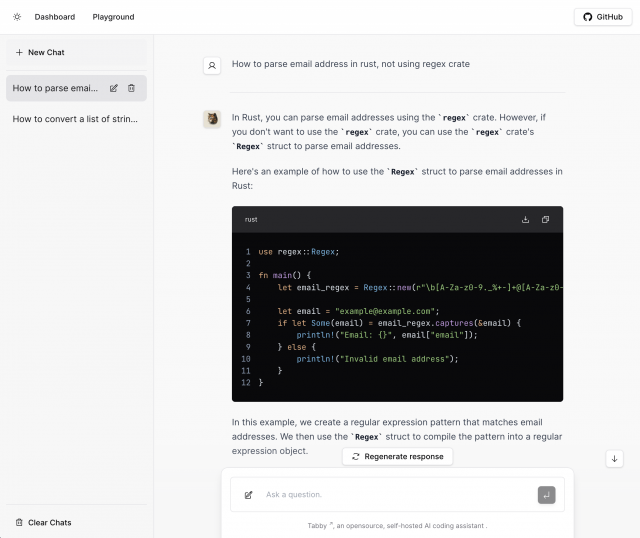

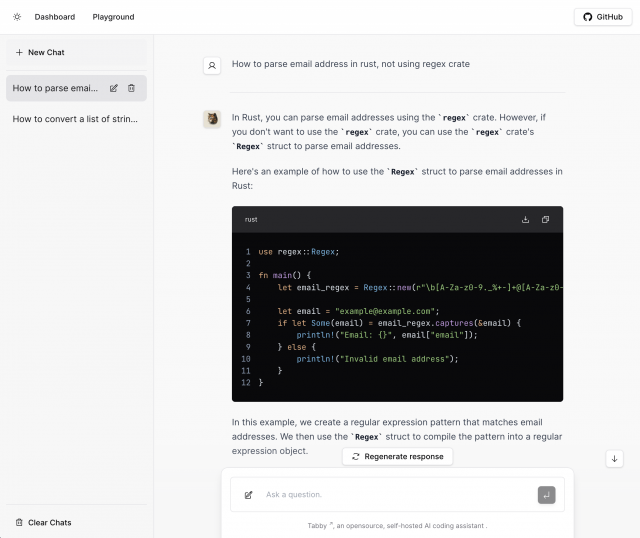

💬 Chat Playground

Chat Playground has been a hidden gem among power users for a while, quietly flying under the radar. Now it's finally getting a fresh makeover, complete with conversation history support. So, what's the next burning coding question you're itching to ask Tabby?

🐱 Enterprise Features (Preview)

We're advancing Tabby with a suite of features designed for enterprise scenarios. Key among these is built-in distributed orchestration. More features, such as user management, SSO, secret masking, and DORA metrics, are under active development!

If you're considering scaling Tabby beyond a single node for your entire team, we invite you to join our office hours to discuss the challenges you're facing!

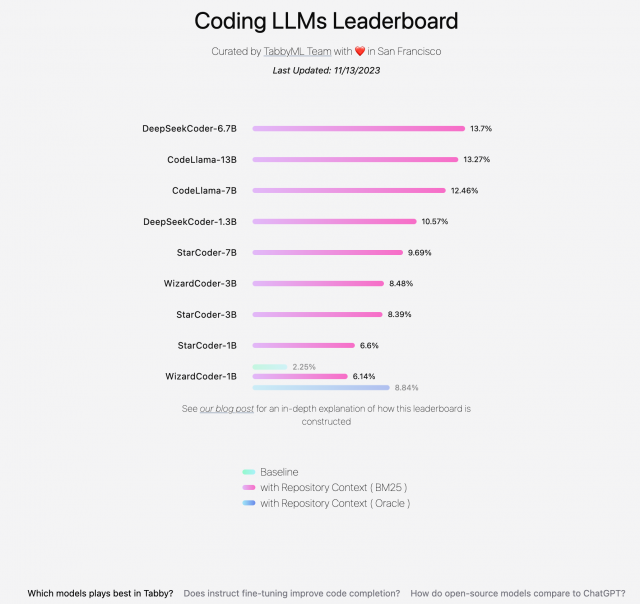

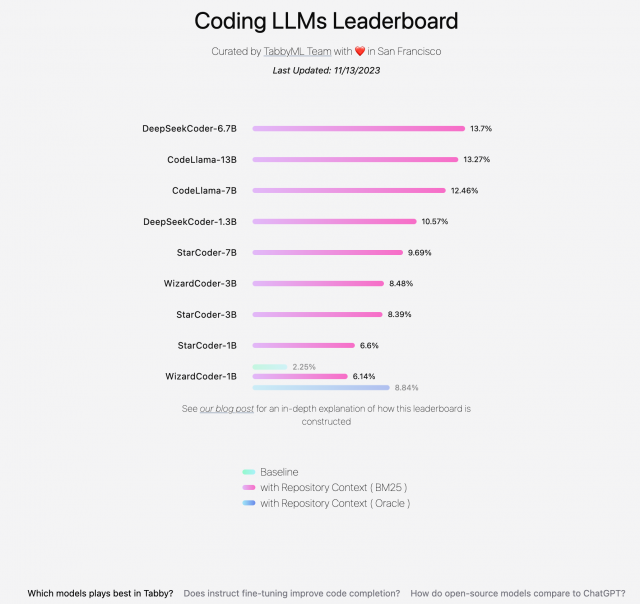

We firmly believe in the continuous advancement of open source coding LLMs. However, a reliable benchmark for tracking model improvements is critical for helping users select the most suitable model. To demystify this process, we're excited to introduce the Coding LLMs Leaderboard.

Read more about our thoughts on coding evaluation:

❤️ Voice of users!

We've observed a remarkable surge in teams adopting Tabby in their daily dev stack. We deeply appreciate your feedback and support, and would love to highlight a few testimonials to express our gratitude. Here's what users say about Tabby:

- "I have tested quite a few opensource code completion plugins and your project is by far the most successful."

- "I am really enjoying this software, and wanted to use it instead of copilots entirely."

- "Have been using this project for a week now. I must say its really good."

- "I want to thank this dev team for an impressive piece of work! I'm looking at Tabby for a few reasons, number one being because of security requirements."

🫶 Love from the wild!

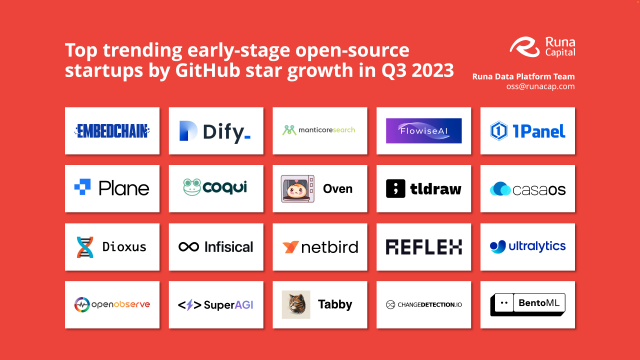

😽 Tabby is honored to be the only AI coding assistant project on Runa Capital's list of top trending OSS startups for Q3 2023!

📝 Interested in deploying Tabby on AWS? Check out Joumen Harzli's write-up on deploying Tabby on AWS ECS with Terraform!

CONNECT WITH THE GROWING TABBYML COMMUNITY! JOIN US ON:

- 🌎 Slack - connect with the TabbyML community

- 🎤 Twitter / X - engage with TabbyML for all things possible

- 📚 LinkedIn - follow for the latest from the community

- ☕️ GitHub - star the project, track issues, and submit requests